Olivia Brown and Victoria Helus advocate for AI literacy

Olivia Brown and Victoria Helus never took any artificial intelligence (AI) or machine learning (ML) courses as undergraduates. Brown completed only one ML course in graduate school and interned on an AI-related project. Most of their learning in this area came on the job at MIT Lincoln Laboratory, where they both started their careers in 2015, fresh from college: Brown with a bachelor's and master's in systems engineering and Helus with a bachelor's in applied mathematics and economics (she later earned her master's in statistics through the Lincoln Scholars Program). To acquire a foundational understanding, they enrolled in online courses and tutorials, read research papers and books, and gained hands-on experience working on research programs for sponsors. At that time, AI was much less specialized and thus more accessible. They applied what they learned to leverage ML advances in robustness and explainability for ballistic missile defense applications.

"We sought to understand if and how AI systems might fail and how different conditions during training and testing could hurt performance," explains Brown, who moved to the Technology Office's AI Technology Group when it was established at the Laboratory in 2019.

"We came in at a time when AI and ML were starting to explode, and the DoD [Department of Defense] was excited about the potential," adds Helus, who joined the AI Technology Group earlier this year.

Though AI and ML — the former referring to the general science of machines mimicking human thinking and behaviors, and the latter a subset focused on training machines to automatically learn from data — were first introduced in the 1950s, work in these fields has grown exponentially in the past decade. Increases in computing power, the development of new algorithmic techniques, and the widespread availability of large datasets for training have all contributed to this renewed interest. But this renewed interest needs to be met with caution.

"This kind of technology has its limitations," Helus continues. "We had to help make decision makers aware that it's not some silver bullet capable of solving any problem. We must be careful about the way we engineer AI and ML systems. A lot of basic education from the ground up was involved."

For example, not every task is well suited to AI. Though computers excel at certain well-structured, predictable, and repetitive tasks like image classification and board games, other less-structured, creative tasks are still better executed by human experts. If a problem is appropriate for AI, large datasets with enough variation to represent the problem are necessary to properly train the ML models. And even if a model performs well on the dataset it was trained on, this performance does not necessarily generalize to new data. Rigorous testing prior to deploying systems for operational use is critical to building confidence that the model will work on future unseen data.
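The gap between training performance and performance on new data can be seen in a toy example. The sketch below is purely illustrative and is not drawn from the Laboratory's work: the numbers, the labels, and the choice of a 1-nearest-neighbor model are all assumptions made for the demonstration.

```python
# Toy data, invented for illustration: label a number "big" if it is >= 50.
# The training example (45, "big") is deliberately mislabeled to mimic noisy data.
TRAIN = [(10, "small"), (20, "small"), (30, "small"), (45, "big"),
         (60, "big"), (70, "big"), (90, "big")]

# Held-out examples the model never saw, labeled with the true rule.
TEST = [(5, "small"), (25, "small"), (42, "small"), (47, "small"),
        (55, "big"), (80, "big")]


def predict(train, x):
    """1-nearest-neighbor: copy the label of the closest training example."""
    _, label = min(train, key=lambda pair: abs(pair[0] - x))
    return label


def accuracy(train, examples):
    """Fraction of examples whose predicted label matches the given label."""
    correct = sum(predict(train, x) == label for x, label in examples)
    return correct / len(examples)


train_acc = accuracy(TRAIN, TRAIN)  # the model "memorizes" its training set
test_acc = accuracy(TRAIN, TEST)    # performance on unseen data

print(f"training accuracy: {train_acc:.2f}")
print(f"held-out accuracy: {test_acc:.2f}")
```

Here the model scores perfectly on the data it was trained on, yet gets only four of the six held-out examples right, because it faithfully reproduces the one mislabeled training point. Measuring accuracy on data kept aside from training is what exposes the problem.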

"The DoD space is very different from that of industry," explains Helus. "We don't often have the huge troves of data like a Google or Facebook, where users are cooperatively providing those data. We're also operating in a safety-critical environment, so the stakes are much higher and the tolerance for mistakes much less."

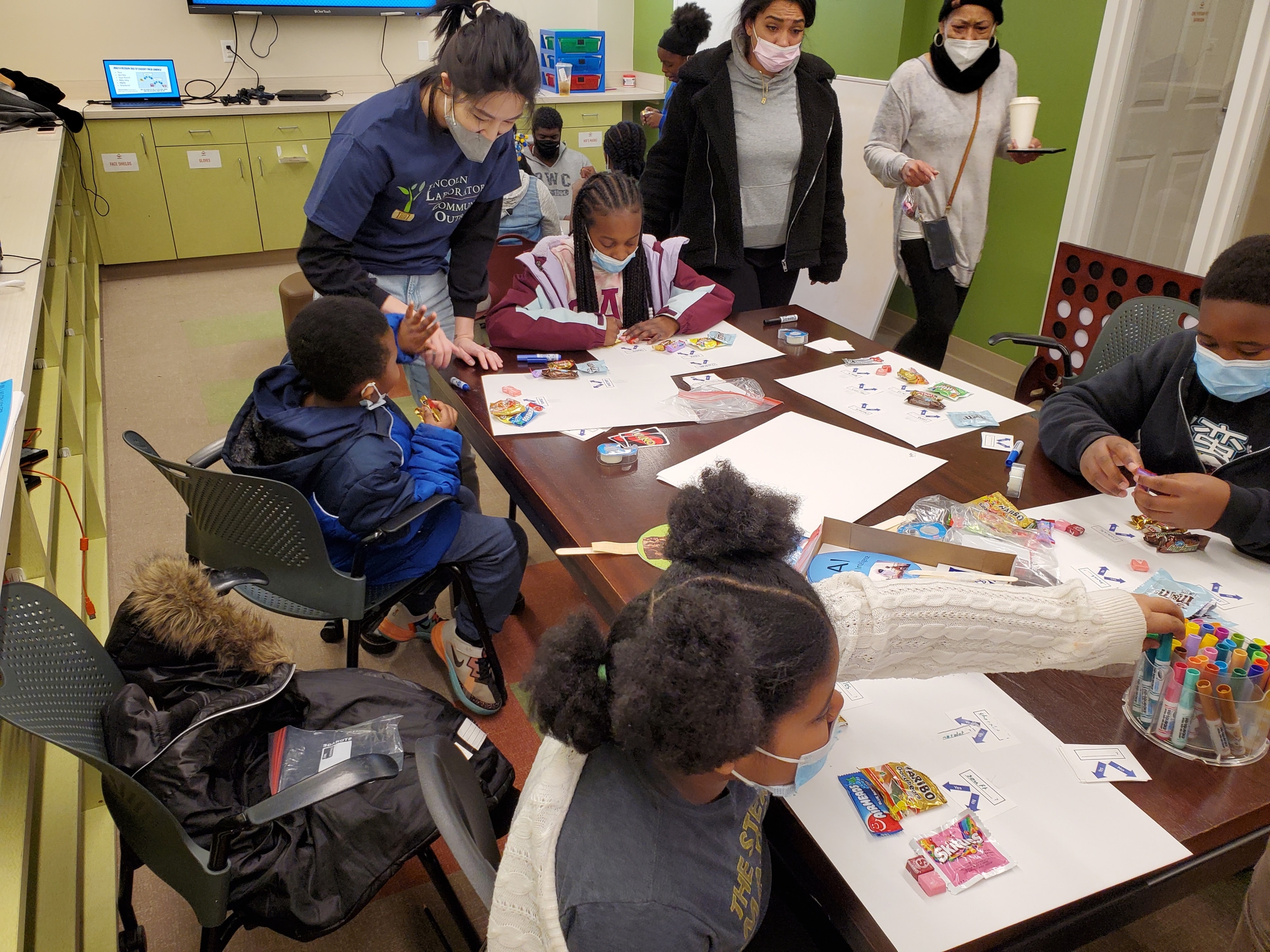

Because their work has always included this educational component, Brown and Helus saw an opportunity to reach an even wider, and younger, audience. Through the Laboratory's Girls' Innovation Research Lab (G.I.R.L.), they have been leading workshops teaching girls and young women about fundamental AI/ML concepts and concerns surrounding safety and ethics. This outreach program inspires girls, many of whom are from underprivileged communities, to pursue careers in STEM. Over the past two years, they have led workshops at different schools and organizations in the greater Boston area: Hanscom Middle School, Brookview House, and Girls Inc. They also participated in a career panel at Pioneer Charter School of Science. While G.I.R.L. prioritizes serving a female demographic and representing women in STEM through a female-heavy staff presence at events, the program also holds co-ed events serving all underrepresented populations.

Previous G.I.R.L. workshops had been offered on related topics like programming and robotics, but Brown and Helus noted a gap. Although students today are exposed to AI/ML earlier in their education, with courses widely available in college and some even at the high school level, Brown and Helus are concerned that as the technology becomes more ubiquitous, people may take it for granted without questioning how it works and whether it should be used for a particular application. The rising popularity of "code-free coding," in which you drag and drop boxes according to what you want the code to do, is disconnecting people even further from the math underlying AI/ML systems.

That's why screens are not part of their AI/ML workshops. Instead, the workshops engage students in hands-on activities illustrating high-level concepts and in thoughtful discussion about the benefits and perils of the technology. The curriculum borrows MIT Media Lab–developed course content geared toward middle schoolers. Brown and Helus condensed this content to fit within two hours and supplemented it with some of their own content. Because participants have spanned elementary through high school, Brown and Helus have since adapted the curriculum to be age appropriate. During the pandemic, they shifted the workshops to a virtual format, with activity kits shipped to the students. A couple of months ago, the workshops returned to an in-person setup.

First, participants are introduced to the three parts of AI: data, learning algorithms, and prediction. Brown and Helus walk them through examples of each of these aspects for different applications, such as Netflix's recommender system providing personalized show and movie suggestions for viewers. Then, the students play an "AI or not" game in which they are presented with various technologies such as a Snapchat photo filter, classic car, remote-controlled robot, and toaster, and are asked to hold up a supplied paddle displaying the affirmative on one side and negative on the other. To understand the concept of an algorithm, specifically a popular type called a decision tree, the students sort different items such as candies. They decide which characteristics of the candies (e.g., wrapper color, ingredients, size), which serve as the "branches" of the tree, are important for classification.
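For readers who do want to see the candy-sorting idea on a screen, the sketch below mirrors the three parts named above (data, learning algorithm, prediction) with a one-level decision tree, sometimes called a decision stump. It is not the workshop's material: the candies, the feature names (`wrapper_color`, `has_chocolate`, `size`), and the "melty"/"sturdy" labels are all hypothetical.

```python
from collections import Counter, defaultdict

# Data: hypothetical candies; the "label" is how a student chose to sort each one.
CANDIES = [
    {"wrapper_color": "red",   "has_chocolate": True,  "size": "small", "label": "melty"},
    {"wrapper_color": "blue",  "has_chocolate": True,  "size": "large", "label": "melty"},
    {"wrapper_color": "green", "has_chocolate": True,  "size": "small", "label": "melty"},
    {"wrapper_color": "red",   "has_chocolate": False, "size": "small", "label": "sturdy"},
    {"wrapper_color": "green", "has_chocolate": False, "size": "large", "label": "sturdy"},
    {"wrapper_color": "blue",  "has_chocolate": False, "size": "small", "label": "sturdy"},
]
FEATURES = ["wrapper_color", "has_chocolate", "size"]


def branch_counts(examples, feature):
    """Group examples by their value of `feature` and count labels per branch."""
    branches = defaultdict(Counter)
    for example in examples:
        branches[example[feature]][example["label"]] += 1
    return branches


def split_errors(examples, feature):
    """Mistakes made if every branch simply predicts its most common label."""
    return sum(sum(counts.values()) - max(counts.values())
               for counts in branch_counts(examples, feature).values())


def learn_stump(examples, features):
    """Learning algorithm: keep the single feature whose branches sort best."""
    best = min(features, key=lambda f: split_errors(examples, f))
    rule = {value: counts.most_common(1)[0][0]
            for value, counts in branch_counts(examples, best).items()}
    return best, rule


def predict(stump, candy):
    """Prediction: follow the branch matching the candy's feature value."""
    feature, rule = stump
    return rule.get(candy[feature], "unknown")


stump = learn_stump(CANDIES, FEATURES)
new_candy = {"wrapper_color": "purple", "has_chocolate": True, "size": "large"}
print("split on:", stump[0])
print("prediction:", predict(stump, new_candy))
```

In this made-up set, wrapper color and size each leave three candies in the wrong pile, while the chocolate ingredient separates the piles cleanly, so the learner picks it as the branch. A full decision tree would repeat the same choice within each branch until the piles are pure.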

Brown and Helus then delve into mistakes that AI makes, illustrating historical biases that may be present in our available data today. They refer to instances such as how the first page of results from Googling images of "physicist" shows only men and how translating "She is a doctor; he is a nurse" to a foreign language and then back to English modifies the sentence to "He is a doctor; she is a nurse." These references prepare the students for a group discussion on AI ethics. At the end of the workshop, students are asked how they would use AI for good and are introduced to some female engineers and scientists working in the field. Feedback has been positive, with many students asking for follow-on workshops delving more into the mathematics.

"Our goal is to expose students to a new STEM-related topic and show them that there are women and underrepresented minorities working in this space," says Brown. "We want them to be inspired to consider AI as a field they can go into, whether as a computer scientist, mathematician, or lawyer. Representation and diversity in AI are critical to ensuring future technology benefits all people."

"It's empowering for students to see role models and recognize that they can contribute too," says Helus. "What we really want is AI literacy for everybody. Often, a technology like this harms underserved and marginalized communities the most. For example, facial recognition algorithms are less accurate for people of color. AI literacy should be accessible for all, even if you aren't going into STEM, because the technology is part of our daily lives. It does everyone good to understand it and its limitations so we can advocate for ourselves and others."

From Google Search and facial filters to autocorrect, Netflix recommendations, and email spam filters, AI is busy at work behind the scenes. The pervasiveness of the technology in society and thus the numerous opportunities to make an impact are a big part of what drew Brown and Helus into the field.

Today, Brown continues her work to ensure that ML systems are reliable, stable, and resilient. In one project, under the Department of the Air Force – MIT AI Accelerator, she is building tools to evaluate and enhance the robustness of ML models for various Air Force applications, including weather forecasting. "What kinds of data corruptions might they encounter?" asks Brown. Through this project and a related project internally funded by the Laboratory, Brown is part of a team that assembled a responsible AI toolbox for training models against different kinds of perturbed, or corrupted, data. Brown is now interfacing with various stakeholders to incorporate this toolbox into their test and evaluation pipelines. In a third project, also internally funded by the Laboratory, Brown is looking at ways to detect that an ML model has been fooled during training. Brown is also collaborating with Helus and others to help organize courses for the Laboratory's Recent Advances in AI for National Security workshop in November 2022.
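The question of how a model holds up under corrupted data can be illustrated with a very small experiment. The sketch below is not the Laboratory's toolbox or method; it assumes a hypothetical temperature classifier and a single, simple corruption (a constant sensor offset) to show the general pattern of evaluating one model at increasing corruption severity.

```python
# Hypothetical temperature readings (degrees Celsius) with their true labels.
READINGS = [-10.0, -5.0, -2.0, -1.0, 1.0, 2.0, 5.0, 10.0]
LABELS = ["freezing" if t < 0 else "not freezing" for t in READINGS]


def model(temperature):
    """Stand-in for a trained model: a fixed threshold at 0 degrees."""
    return "freezing" if temperature < 0 else "not freezing"


def corrupt(readings, offset):
    """One simple corruption: a miscalibrated sensor adds a constant offset."""
    return [t + offset for t in readings]


def accuracy(inputs, labels):
    """Fraction of inputs the model labels correctly."""
    correct = sum(model(x) == y for x, y in zip(inputs, labels))
    return correct / len(labels)


# Evaluate the same model as the corruption grows more severe.
results = {offset: accuracy(corrupt(READINGS, offset), LABELS)
           for offset in (0.0, 1.5, 3.0, 6.0)}

for offset, acc in results.items():
    print(f"offset {offset:+.1f} C -> accuracy {acc:.3f}")
```

On clean inputs the model is perfect, and its accuracy falls step by step (to 0.875, 0.75, and 0.625) as the offset grows. Real robustness evaluations use far richer corruptions and models, but the structure is the same: hold the model fixed, vary the perturbation, and track how performance degrades.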

For Helus, AI education continues to be a major component of her work. Through a different AI Accelerator project, she analyzed gaps in the content for a comprehensive AI 101 course for the military. This summer, she is helping colleague Ryan Soklaski teach Autonomous Cognitive Assistance (Cog*Works), which is offered at the MIT Beaver Works Summer Institute. Another research thrust for Helus is AI ethics, where, for example, she's interested in evaluating bias in AI system recommendations given definitions of fairness. Helus' third research thrust is knowledge-informed ML, or embedding human semantic knowledge and common sense into ML systems so they can better process high-level instructions and reason about their environment in a human-aligned way. It's this kind of reasoning that sends us downstairs to the kitchen when someone tells us to "Go get an apple," for example.

"Enabling such implicit reasoning and contextual understanding is a cool problem," says Helus. "The direction we see this moving long term is connecting this capability with an advanced perception system, which we like to call 'hooking together brain and eyes'—in other words, enabling a robot to process a high-level instruction and navigate efficiently to complete the task."

Working at the forefront of AI and ML, Brown and Helus see firsthand how rapidly research is advancing. Continued education, among adults and children alike, is critical to ensuring AI literacy for all.

Inquiries: contact Ariana Tantillo.