Power consumption signals help reveal counterfeit parts

A common problem for system developers who use commercial off-the-shelf (COTS) components is the very real possibility that these components are not what they purport to be. Occasionally these components are counterfeit: they appear authentic but contain flaws that could sabotage the larger system. For example, in 2011 the U.S. Navy and Boeing discovered a defective ice detection system on a new P-8 Poseidon aircraft. Engineers traced the defect to small devices within the system that had been sold as "new" but were in fact recycled, a common counterfeit practice. Ice detection systems are critical because they alert pilots when ice is present on an aircraft's control surfaces, and deploying a faulty system could have led to mission failure and even loss of life. This example illustrates the threat of counterfeit devices finding their way into Department of Defense (DoD) systems and compromising missions.

To help address this issue, a team at Lincoln Laboratory has been developing a technology called Side-Channel Authenticity Discriminant Analysis (SICADA), which uses power consumption information to identify counterfeit COTS parts.

"Side-channel analysis attempts to learn about a device's operation through monitoring unintended signal emission," said Michael Vai, a senior staff member in the Secure Resilient Systems and Technology Group. One type of unintended signal emission is power consumption. When a device is powered on or off, or when individual components within it are activated or shut down, the resulting change in power usage can be detected and used to infer the device's inner workings.

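As a minimal sketch of the idea above, the function below scans a sampled power trace and reports where consumption jumps, the kind of on/off event Vai describes. It assumes the trace is already available as a list of numbers; the function name, the fixed step threshold, and the sample values are hypothetical and not taken from SICADA, and real side-channel measurements would need filtering before a simple step test like this is meaningful.

```python
from typing import List, Sequence


def detect_power_transitions(trace: Sequence[float], min_step: float) -> List[int]:
    """Return sample indices where power changes by at least min_step.

    Hypothetical helper: a transition is reported at index i when
    |trace[i] - trace[i - 1]| >= min_step, i.e. where some component
    appears to have switched on or off between consecutive samples.
    """
    transitions = []
    for i in range(1, len(trace)):
        if abs(trace[i] - trace[i - 1]) >= min_step:
            transitions.append(i)
    return transitions


# Hypothetical trace in milliwatts: idle, a block switches on at sample 3, off at sample 6.
trace_mw = [10.0, 10.2, 9.9, 25.1, 25.3, 24.8, 10.1, 10.0]
print(detect_power_transitions(trace_mw, min_step=5.0))  # [3, 6]
```
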
SICADA adapts and customizes side-channel analysis techniques to characterize parts, identify variations among them, and compare those variations with a model of a legitimate part. Parts with negligible variations pass, while parts whose variations point to counterfeiting fail.

"There are several different types of counterfeit parts," said Eric Koziel, who is also involved in the research. "Some parts are manufactured by authentic entities but have different quality assurance standards from what you're expecting. Others are manufactured at an unknown foundry, and you don't know what's in them [the parts] or what quality standards they conform to."

Koziel and Vai, along with Kate Thurmer of the Quantum Information and Integrated Nanosystems Group, developed the SICADA methodology by first creating "golden models" of several commercially available, authentic field-programmable gate arrays (FPGAs) and microcontrollers, two types of COTS devices often used in DoD systems. These models are datasets, built from empirical measurements, that represent how a "good" FPGA or microcontroller should behave.
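
To illustrate what a golden model might look like in its simplest form, the sketch below assumes each part has already been reduced to a fixed-length vector of numeric features derived from its power measurements, and summarizes a set of authentic parts by a per-feature mean and standard deviation. An unverified part is then scored by its largest per-feature z-score. The feature layout, the `GoldenModel` class, and the max-z-score measure are hypothetical simplifications, not the representation the Lincoln Laboratory team used.

```python
import statistics
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class GoldenModel:
    """Hypothetical golden model: per-feature mean and standard deviation of authentic parts."""

    means: List[float]
    stdevs: List[float]

    @classmethod
    def fit(cls, authentic: Sequence[Sequence[float]]) -> "GoldenModel":
        # Transpose so each column holds one feature measured across all authentic parts.
        columns = list(zip(*authentic))
        means = [statistics.mean(col) for col in columns]
        # Sample standard deviation; requires at least two authentic parts.
        stdevs = [statistics.stdev(col) for col in columns]
        return cls(means, stdevs)

    def deviation(self, part: Sequence[float]) -> float:
        """Largest per-feature z-score of an unverified part relative to the golden model."""
        return max(
            # A zero-variance feature only tolerates an exact match.
            abs(x - m) / s if s > 0 else (0.0 if x == m else float("inf"))
            for x, m, s in zip(part, self.means, self.stdevs)
        )


# Hypothetical two-feature measurements from three authentic parts.
authentic_parts = [[10.0, 50.0], [12.0, 52.0], [8.0, 48.0]]
model = GoldenModel.fit(authentic_parts)
print(model.deviation([10.0, 51.0]))  # 0.5
print(model.deviation([16.0, 50.0]))  # 3.0
```
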

Using these models, the team demonstrated that they could compare unverified parts with their respective golden models, using support vector machines (a machine learning method often used for classification) to determine whether and how the parts differ. The researchers found that certain differences from the golden models could indicate that a part is counterfeit.
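
The article does not say how SICADA's support vector machines are configured. Purely as an illustration of the technique, the sketch below trains a linear SVM with the Pegasos stochastic subgradient method on hypothetical feature vectors labeled authentic (+1) or suspect (-1). The assumption that labeled suspect examples are available, the feature values, and both function names are hypothetical; if only authentic parts were measured, a one-class SVM fitted to golden-model data would be the closer analogue.

```python
import random
from typing import List, Sequence, Tuple


def train_linear_svm(
    samples: Sequence[Sequence[float]],
    labels: Sequence[int],
    lam: float = 0.01,
    epochs: int = 200,
    seed: int = 0,
) -> Tuple[List[float], float]:
    """Hypothetical helper: linear SVM (hinge loss + L2 penalty) trained with Pegasos SGD.

    labels must be +1 (authentic) or -1 (suspect). Returns (weights, bias).
    The bias is learned by appending a constant 1.0 feature, so it is regularized too.
    """
    rng = random.Random(seed)
    data = [list(x) + [1.0] for x in samples]  # constant feature for the bias term
    w = [0.0] * len(data[0])
    t = 0
    for _ in range(epochs):
        order = list(range(len(data)))
        rng.shuffle(order)
        for i in order:
            t += 1
            eta = 1.0 / (lam * t)  # Pegasos step size
            x, y = data[i], labels[i]
            margin = y * sum(wj * xj for wj, xj in zip(w, x))
            # Shrink weights: gradient step on the L2 penalty.
            w = [(1.0 - eta * lam) * wj for wj in w]
            # Hinge-loss subgradient: only samples inside the margin move w.
            if margin < 1.0:
                w = [wj + eta * y * xj for wj, xj in zip(w, x)]
    return w[:-1], w[-1]


def decision_score(w: Sequence[float], b: float, x: Sequence[float]) -> float:
    """Signed score: positive means the part falls on the authentic side of the boundary."""
    return sum(wj * xj for wj, xj in zip(w, x)) + b


# Hypothetical, well-separated feature vectors for illustration only.
authentic = [[2.0, 2.0], [2.5, 1.5], [1.5, 2.5], [3.0, 3.0]]
suspect = [[-2.0, -2.0], [-2.5, -1.5], [-1.5, -2.5], [-3.0, -3.0]]
w, b = train_linear_svm(authentic + suspect, [1] * 4 + [-1] * 4)
print(decision_score(w, b, [2.2, 2.4]) > 0)  # True
```

The sign of the score gives the class; its magnitude gives a natural quantity to threshold, which is one way a tunable pass/fail cutoff could be layered on top of the classifier.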

One key aspect of the SICADA methodology is that the threshold for labeling a part as counterfeit can be changed on the basis of the requirements for the mission or task. "For example, some missions might require that a part have a specific date code for consistency," said Koziel. "Counterfeiters could potentially try to relabel the same parts from different date codes just to meet the requirement. In such a case, you can adjust the SICADA thresholds to be much tighter and thus should be able to distinguish parts from different date codes." On the flip side, some missions may allow more "borderline" parts to be used, and SICADA can be recalibrated to allow those to pass through.
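
To make the adjustable threshold concrete, here is a minimal sketch assuming each part has already been reduced to a single deviation score, where lower means closer to the golden model. The mission names, numeric limits, and scores are hypothetical; the article does not say how SICADA encodes its thresholds.

```python
from typing import Dict

# Hypothetical mission profiles: the largest deviation from the golden model a part may show and still pass.
MISSION_THRESHOLDS: Dict[str, float] = {
    "strict_date_code": 1.0,  # tight limit, e.g. to separate parts from different date codes
    "standard": 3.0,
    "permissive": 5.0,  # lets more borderline parts through
}


def screen_parts(deviations: Dict[str, float], mission: str) -> Dict[str, str]:
    """Label each part PASS or FAIL by comparing its deviation score with the mission's limit."""
    limit = MISSION_THRESHOLDS[mission]
    return {part: ("PASS" if score <= limit else "FAIL") for part, score in deviations.items()}


deviations = {"part_A": 0.4, "part_B": 2.1, "part_C": 4.2}
print(screen_parts(deviations, "strict_date_code"))  # {'part_A': 'PASS', 'part_B': 'FAIL', 'part_C': 'FAIL'}
print(screen_parts(deviations, "permissive"))  # {'part_A': 'PASS', 'part_B': 'PASS', 'part_C': 'PASS'}
```

The same measurements yield different verdicts depending only on the mission profile, which mirrors the tightening and loosening Koziel describes.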

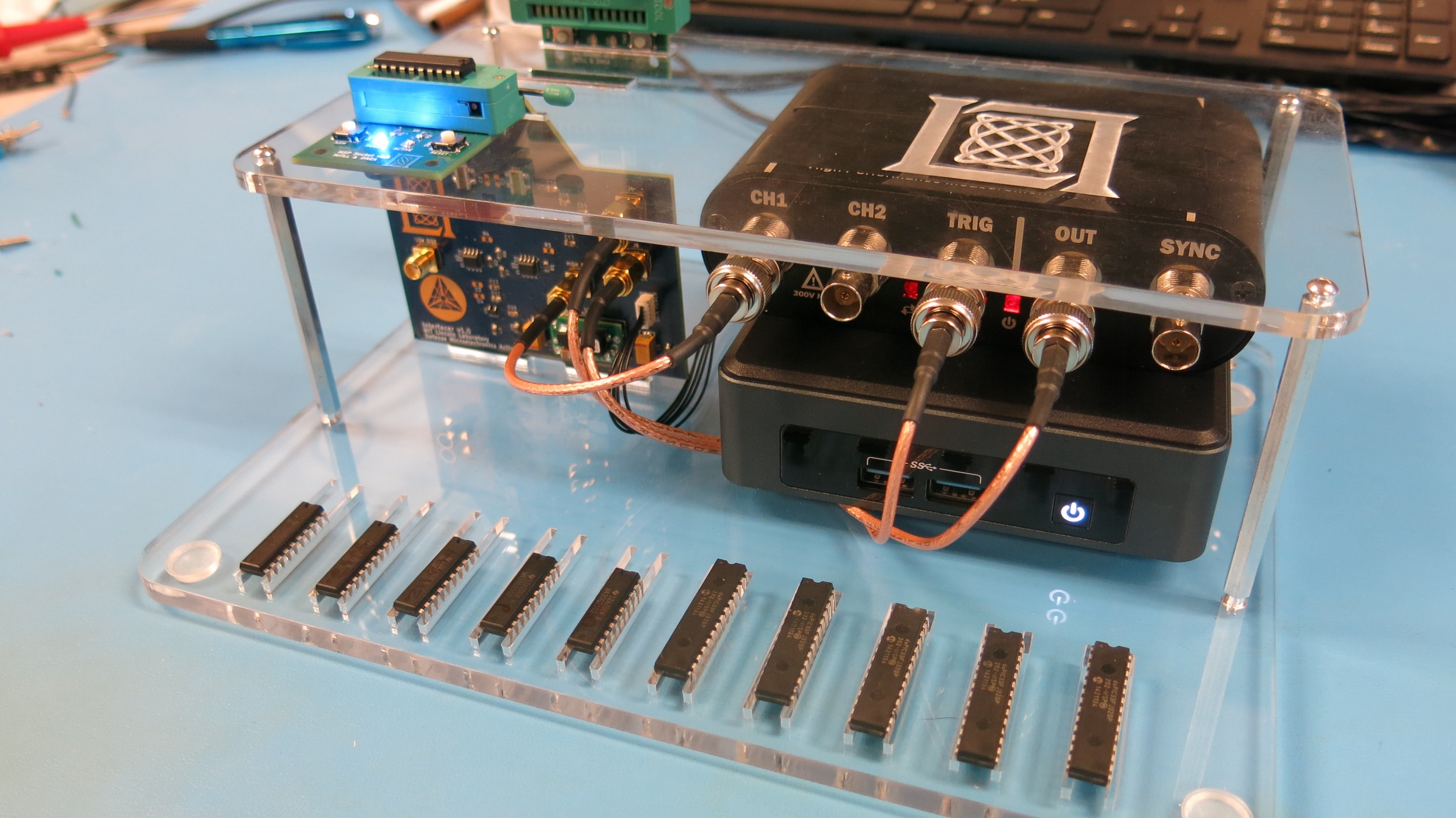

SICADA is implemented entirely with standard laboratory equipment, which makes a portable unit easy and cost-efficient to build. In October, the team demonstrated SICADA's capabilities in a compact prototype unit, and they plan to transition a copy of the portable device to their sponsor for further demonstrations and testing.

"We're now working with the Engineering Division to fabricate more complete units," said Koziel. "We have the algorithms behind everything we need to do, but there isn't a commercial platform that will support this kind of testing yet."

Beyond mission assurance for the DoD, the SICADA methodology could help researchers monitor a device's general wear and tear. It can also support foundry-of-origin studies, which link measured characteristics of a part to a specific manufacturing process or instrument used by a known fabrication facility, thereby identifying the part's origin.

"SICADA has potential in mitigating other vulnerabilities, such as malicious changing of the manufacturing process so that the device is fully functional but with reduced reliability," said Vai. "We look forward to opportunities for this and other technology advancement in securing our nation's electronic supply chain."