A World Leader in Interactive Supercomputing

When Lincoln Laboratory researchers were developing ACAS-X, a new collision-avoidance system for airplanes, they needed to run large-scale simulations to prove that the system would respond correctly time and again. Such simulations would require powerful processors to mimic what ACAS-X would need to do: detect and track aircraft, assess their potential to collide, and then issue advisories to pilots to prevent a collision. Researchers turned to the Lincoln Laboratory Supercomputing Center (LLSC) to simulate more than 58 billion aircraft encounters for ACAS-X to run through. For perspective, it would take more than 100,000 years to run 58 billion one-minute encounters in real time. These simulations were crucial to validating the system, which is expected to be installed in more than 30,000 passenger and cargo aircraft worldwide.
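The real-time figure quoted above can be checked with a few lines of arithmetic. The sketch below uses only the two numbers given in the text (58 billion encounters, one minute each). The 365-day year and the helper name `real_time_years` are assumptions made for illustration.

```python
# Back-of-the-envelope check of the real-time estimate.
ENCOUNTERS = 58_000_000_000        # simulated encounters (from the article)
MINUTES_PER_ENCOUNTER = 1          # one-minute encounters (from the article)
MINUTES_PER_YEAR = 60 * 24 * 365   # assumption: 365-day year, leap days ignored


def real_time_years(encounters, minutes_each):
    """Years needed to play every encounter back to back in real time."""
    return encounters * minutes_each / MINUTES_PER_YEAR


years = real_time_years(ENCOUNTERS, MINUTES_PER_ENCOUNTER)
print(f"{years:,.0f} years")
```

Under these assumptions the result is a little over 110,000 years, in line with the rounded figure in the text.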

Increasingly, Laboratory research requires powerful processors to help answer big questions. How can we predict weather events precisely, or teach autonomous systems how to behave, or train machine learning algorithms to find patterns in data? The way forward is paved with supercomputers, their thousands of processors running huge calculations in parallel, like lanes running down a highway.

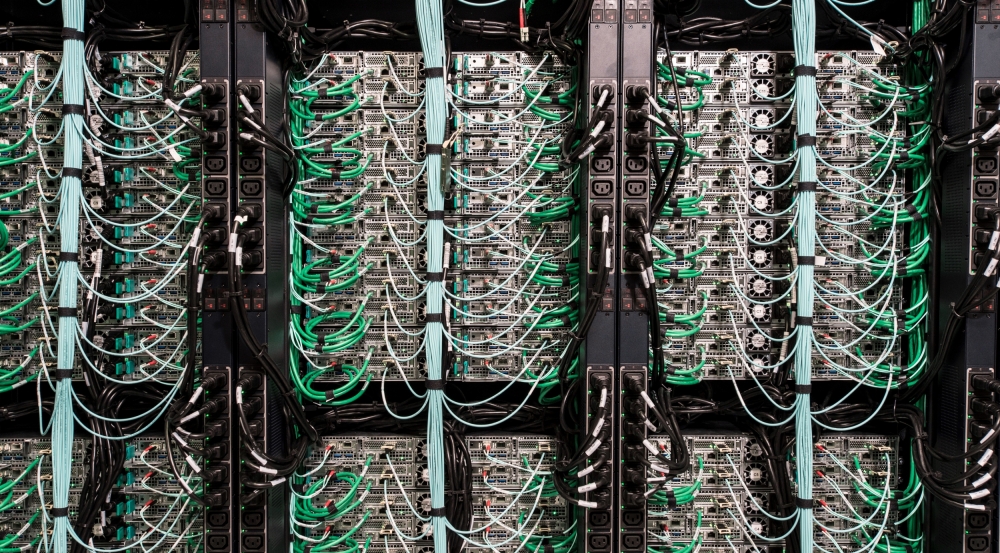

The LLSC is driving these systems. It is the largest interactive, on-demand supercomputing center in the world. It encompasses several supercomputers, including TX-E1, which supports collaborations with the MIT campus and other institutions, and TX-Green, the flagship and the largest and most powerful of the systems. These systems use parallel computing to deliver fast computational results. In simple terms, parallel computing means breaking a task into smaller pieces and executing those pieces at the same time, each on a separate processor, with the processors networked together into a computing cluster.

Together, these systems support more than 1,000 users across the Laboratory and MIT community.
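The idea of parallel computing described above (break a task into pieces, run the pieces at the same time, combine the results) can be sketched in a few lines. This is a minimal illustration, not the LLSC's software: the function names are hypothetical, and a local thread pool stands in for the separate networked processors of a cluster. Because of Python's global interpreter lock, the threads here show the decomposition pattern rather than a real speedup.

```python
from concurrent.futures import ThreadPoolExecutor


def split(items, n_pieces):
    """Break a list into n_pieces contiguous chunks of near-equal size."""
    size, extra = divmod(len(items), n_pieces)
    chunks, start = [], 0
    for i in range(n_pieces):
        # The first `extra` chunks each take one leftover element.
        end = start + size + (1 if i < extra else 0)
        chunks.append(items[start:end])
        start = end
    return chunks


def sum_of_squares(chunk):
    """The work done on one piece, independent of all other pieces."""
    return sum(x * x for x in chunk)


def parallel_sum_of_squares(items, n_workers=4):
    """Split the task, run the pieces concurrently, combine the partial results."""
    chunks = split(items, n_workers)
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        partials = list(pool.map(sum_of_squares, chunks))
    return sum(partials)


data = list(range(1_000))
total = parallel_sum_of_squares(data)
print(total)
```

The pattern works because each piece can be computed without knowing anything about the others. Only the final combining step needs all the partial results.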


Gaining interactive superpower

LLSC users are scientists, engineers, and analysts who need to solve problems that are too large and complex to run on a desktop computer. Yet using a supercomputer isn't as simple as using a desktop; it requires, for example, understanding complex parallel programming techniques to optimize an algorithm for the system. To enable staff to use supercomputers without first becoming parallel processing experts, LLSC engineers created software and tools that make interacting with supercomputers just as user-friendly and accessible as computing on a desktop system. The software does the work; researchers can simply submit their problems using techniques they're familiar with and get answers fast.

This interactivity has its roots at Lincoln Laboratory. In 1956, Laboratory engineers built the TX-0 computer, not only the world's first transistor-based computer but also the first interactive high-performance computer. The TX-0 featured a light pen and monitor that enabled operators to input data into programs in real time and watch the results. A few years later, PhD student Ivan Sutherland used the TX-2 computer to run his software Sketchpad, the first computer program to use a graphical user interface. These first steps led the way into a new interactive-computing frontier.

More than 50 years after TX-0, Lincoln Laboratory researchers used the TX-2500 supercomputer to solve the largest single problem ever run on a computer, and hundreds of staff were tapping into this powerful system through the interactive tools of the Laboratory's first supercomputing center, LLGrid. One of these tools, pMATLAB, let researchers easily convert their serial MATLAB code (used for developing algorithms, running simulations, and performing data analytics) to parallel code that could then run on TX-2500's parallel processors.

Supercomputers had become an integral resource to staff's R&D efforts, and in 2016, the LLSC was established to accommodate the community's growing needs. That same year, Dell EMC upgraded the LLSC's TX-Green system into the most powerful supercomputer in New England and the third most powerful at a U.S. university.

Real outcomes in hours

The computational capabilities of TX-Green have made staggering simulations, such as the 58 billion performed for ACAS-X, possible to achieve quickly: simulations that would have taken a week on the prior system are done in just hours. With its 41,472 Intel advanced processor cores, TX-Green can perform one quadrillion floating-point operations per second (one petaflop). But processing power alone is not what makes the system efficient; the LLSC's on-demand grid scheduling software selects the best hardware and software for each job to ensure that users meet their deadlines. Staff are leveraging these enhancements in diverse research areas.
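To put the two hardware figures above in relation to each other, the sketch below divides the quoted aggregate rate by the quoted core count. It assumes the one-quadrillion figure is a system-wide total spread evenly across all cores, which ignores any differences between nodes; the variable names are illustrative.

```python
# Rough per-core share of the quoted aggregate performance.
CORES = 41_472          # processor cores (from the article)
AGGREGATE_FLOPS = 1e15  # one quadrillion operations per second (from the article)

# Assumption: the aggregate rate is divided evenly among the cores.
per_core_gflops = AGGREGATE_FLOPS / CORES / 1e9
print(f"about {per_core_gflops:.1f} billion operations per second per core")
```

Under that even-split assumption, each core accounts for roughly 24 billion floating-point operations per second.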

One team is harnessing the power of the LLSC and machine learning algorithms to uncover patterns in massive amounts of data, such as 10 years' worth of medical data from an intensive care unit. Staff were able to conduct data analysis tasks two to ten times faster than was previously possible, for example to find patients who had similar respiratory waveforms. These insights can help doctors improve diagnoses and prescribe better treatments.

Machine learning applications are also helping pilots fill gaps in their view of weather conditions. Laboratory staff made extensive use of LLSC resources to build the Offshore Precipitation Capability, a tool that generates radar-like images of precipitation in airspace where radar coverage is not available. The LLSC was used to store large amounts of satellite, lightning, and numerical weather data, to train the tool's machine learning model, and finally to fuse the data into simulated radar images. The Offshore Precipitation Capability is used today by air traffic controllers and pilots to plan offshore flight routes.

Every day, researchers across Lincoln Laboratory and MIT use the LLSC to carry out important work. Models connecting billions of neurons to help us understand brain disorders, simulations to test the effectiveness of a subsurface barrier in absorbing seismic waves, images processed from dense ladar data to locate disaster survivors — these are just a few of the LLSC's latest outputs. At the start are inputs from users with forward-thinking ideas, ones that need processing power to drive them into tomorrow's solutions.

Super green computing

When users at the Laboratory log in to their LLSC accounts to submit a job to TX-Green, they are actually connecting to a data center situated 90 miles away in Holyoke, Massachusetts, a former textile-manufacturing town on the Connecticut River. Today, this river runs through a hydroelectric dam in Holyoke, producing cheap, clean power.

A system such as TX-Green consumes megawatts of electrical power around the clock. Pondering how to someday host such a demanding system at the Laboratory, LLSC engineers brought the idea of a new computing infrastructure in Holyoke to a consortium of Massachusetts universities and technology companies. As a result, the Massachusetts Green High-Performance Computing Center (MGHPCC) was established in 2012 in Holyoke. The LLSC systems are deployed in an energy-efficient building powered by a combination of hydroelectric, wind, solar, and nuclear sources.

The end result is that Lincoln Laboratory has both an extremely powerful computing resource supporting R&D efforts and an extremely green center: the computers in the LLSC run 100 percent carbon-free.