Transparency by Design Network

Understanding how artificial intelligence (AI) formulates decisions has been a long-standing challenge for developers of AI systems. Researchers at Lincoln Laboratory are addressing this challenge by exploring neural networks, an AI technique that tries to produce human-like reasoning by emulating the complex interconnections among neurons in the human brain. Their goal is to make a neural network’s “thought process” transparent to developers.

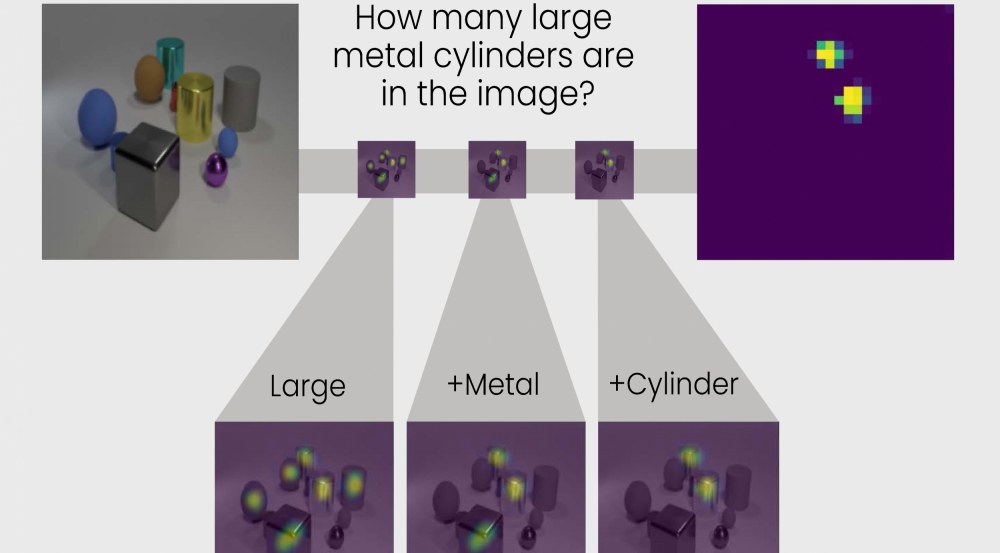

Focusing on neural networks used by applications that characterize objects in images, the research team is breaking down the computer's reasoning into subtasks, each of which is handled by a separate module in the system. For example, if the computer is asked to find large metal cylinders in a picture, the network works through a series of searches (find all large items, find all metallic-looking objects, find cylinders) to arrive at the answer. In the Transparency by Design Network (TbD-net), each module's answer is depicted by visually highlighting the relevant objects. These visualizations let a human analyst see how a module is interpreting the traits (size, reflectivity, shape) of objects in the image.
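
The chain of searches in the cylinder example can be illustrated with a toy sketch. This is not the TbD-net implementation: the actual modules are learned neural network components that operate on image features, whereas the hypothetical `SceneObject`, `make_filter`, and `run_chain` names below work on a hand-labeled list of objects. The sketch only shows the general idea that each module refines the previous module's output and that every intermediate result is kept so an analyst can inspect it.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass(frozen=True)
class SceneObject:
    """A hand-labeled stand-in for an object in an image (illustrative only)."""
    name: str
    size: str
    material: str
    shape: str


# An "attention" here is one boolean per object: True means still highlighted.
Attention = List[bool]
Module = Callable[[List[SceneObject], Attention], Attention]


def make_filter(attribute: str, value: str) -> Module:
    """Build a module that keeps only highlighted objects whose attribute matches."""
    def module(scene: List[SceneObject], attention: Attention) -> Attention:
        return [
            kept and getattr(obj, attribute) == value
            for obj, kept in zip(scene, attention)
        ]
    return module


def run_chain(
    scene: List[SceneObject], chain: List[Tuple[str, Module]]
) -> List[Tuple[str, List[str]]]:
    """Apply modules in order, recording what each one highlights."""
    attention = [True] * len(scene)  # start with every object highlighted
    trace = []
    for label, module in chain:
        attention = module(scene, attention)
        highlighted = [obj.name for obj, kept in zip(scene, attention) if kept]
        trace.append((label, highlighted))
    return trace


# Hypothetical scene and question: "find the large metal cylinders".
SCENE = [
    SceneObject("A", "large", "metal", "cylinder"),
    SceneObject("B", "large", "rubber", "cylinder"),
    SceneObject("C", "small", "metal", "cylinder"),
    SceneObject("D", "large", "metal", "cube"),
    SceneObject("E", "large", "metal", "cylinder"),
]
CHAIN = [
    ("large", make_filter("size", "large")),
    ("metal", make_filter("material", "metal")),
    ("cylinder", make_filter("shape", "cylinder")),
]

if __name__ == "__main__":
    for label, names in run_chain(SCENE, CHAIN):
        print(f"after '{label}' module: {names}")
```

Because the trace keeps each module's output, a wrong final answer can be traced back to the step where the highlighting first went astray, which is the kind of inspection the visualizations are meant to support.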

TbD-net, evaluated on a dataset of 70,000 images and 700,000 questions, has set a new state-of-the-art performance standard for visual reasoning systems. Moreover, the insight into how the system arrived at its conclusions can lead to that elusive understanding of AI processes, knowledge that can enable new systems that users can trust to make correct decisions.

For more information on this work, see the conference paper “Transparency by Design: Closing the Gap Between Performance and Interpretability in Visual Reasoning.”