Explainable Artificial Intelligence for Decision Support

Why does an AI system predict that a patient has a certain disease, forecast a particular storm intensity over a region, or match a job seeker with a high-paying position? Explanations for such critical predictions can have consequences for ethics, law, health, and safety. However, many AI systems operate as black boxes: users can see the inputs and outputs but not the inner workings. This lack of transparency makes it inherently difficult to understand how and why these systems reach their decisions. For human operators to trust AI systems, the explanations must align with human perception and expectations. Yet current explanation techniques are often unreliable.

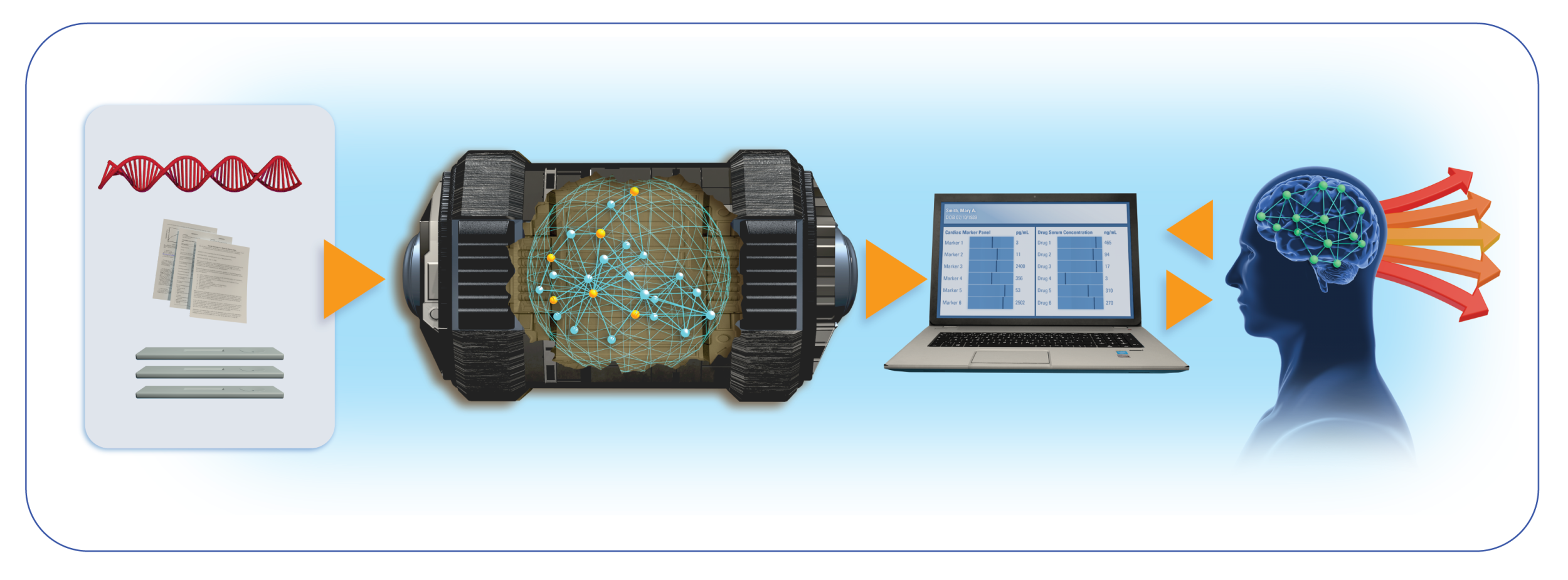

Through the Explainable Artificial Intelligence for Decision Support project, funded by the U.S. Air Force-MIT AI Accelerator, we aim to build explainable machine learning (ML) models and to understand their role in operational workflows, with the goal of improving human-AI collaboration. We are pursuing fundamental research in explainable AI from both ML and human-computer interaction perspectives and developing tools that improve explainability in Department of War case studies. These case studies include generating synthetic weather radar maps with high confidence levels for flight planning, highlighting important visual features associated with disease in medical images, and designing interpretable user interfaces for cyber vulnerability assessments. Our research spans techniques for detecting when AI fails, analysis of dataset bias and spurious correlations, human-friendly explanation frameworks, and user interface concepts.

We are also collaborating with the AI Systems Engineering and Reliability Technologies project team to develop an open-source toolbox for explainable AI. This toolbox will help researchers, data scientists, and developers improve the explainability of ML models, understand failure modes, detect biases, and debug predictions.