Undersea Vision-Based Navigation and Pose Determination for Human-Robot Teaming

A key challenge to effective human-robot teaming is determining the robot's pose, that is, its position and orientation. For example, if several robots were to search underwater alongside a diver, the robots would need to share their relative pose in order to guide the diver to an object of interest.
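The article does not say how poses are shared. The sketch below is a minimal planar illustration, assuming the robot and the diver are both localized in one common map frame, such as a map built by SLAM. It shows why relative pose matters for guidance. A robot sees an object in its own camera frame. That point is moved into the shared map frame and then into the diver's frame, which yields a range and a bearing the diver could follow. The names `Pose2D`, `to_map`, `to_local`, and `guide_diver` are hypothetical. The 2D (x, y, heading) pose is also a simplification: an actual undersea system would track a full 3D, 6-degree-of-freedom pose.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose2D:
    """Hypothetical planar pose in a shared map frame:
    position (x, y) in meters and heading theta in radians."""
    x: float
    y: float
    theta: float


def to_map(pose: Pose2D, px: float, py: float) -> tuple[float, float]:
    """Transform a point from the pose's local frame into the map frame
    (rotate by the heading, then translate by the position)."""
    c, s = math.cos(pose.theta), math.sin(pose.theta)
    return (pose.x + c * px - s * py, pose.y + s * px + c * py)


def to_local(pose: Pose2D, mx: float, my: float) -> tuple[float, float]:
    """Transform a point from the map frame into the pose's local frame
    (inverse of to_map: subtract the position, rotate by -theta)."""
    dx, dy = mx - pose.x, my - pose.y
    c, s = math.cos(pose.theta), math.sin(pose.theta)
    return (c * dx + s * dy, -s * dx + c * dy)


def guide_diver(robot: Pose2D, diver: Pose2D,
                obj_in_robot: tuple[float, float]) -> tuple[float, float]:
    """Return (range_m, bearing_rad) from the diver to an object that the
    robot observed in its own frame. Bearing is measured from the diver's
    heading, positive counterclockwise."""
    mx, my = to_map(robot, *obj_in_robot)
    lx, ly = to_local(diver, mx, my)
    return math.hypot(lx, ly), math.atan2(ly, lx)


if __name__ == "__main__":
    robot = Pose2D(10.0, 0.0, math.pi / 2)  # robot faces +y in the map
    diver = Pose2D(0.0, 0.0, 0.0)           # diver faces +x in the map
    rng, brg = guide_diver(robot, diver, (5.0, 0.0))  # object 5 m ahead of robot
    print(f"range {rng:.2f} m, bearing {math.degrees(brg):.1f} deg")
```

In this example, the object 5 m ahead of the robot sits at map point (10, 5). From the diver's point of view, that is about 11.18 m away and about 26.6 degrees to the left of the diver's heading. Guidance of this kind only works if both poses are known in the same frame, which is the reason real-time pose determination matters for teaming.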

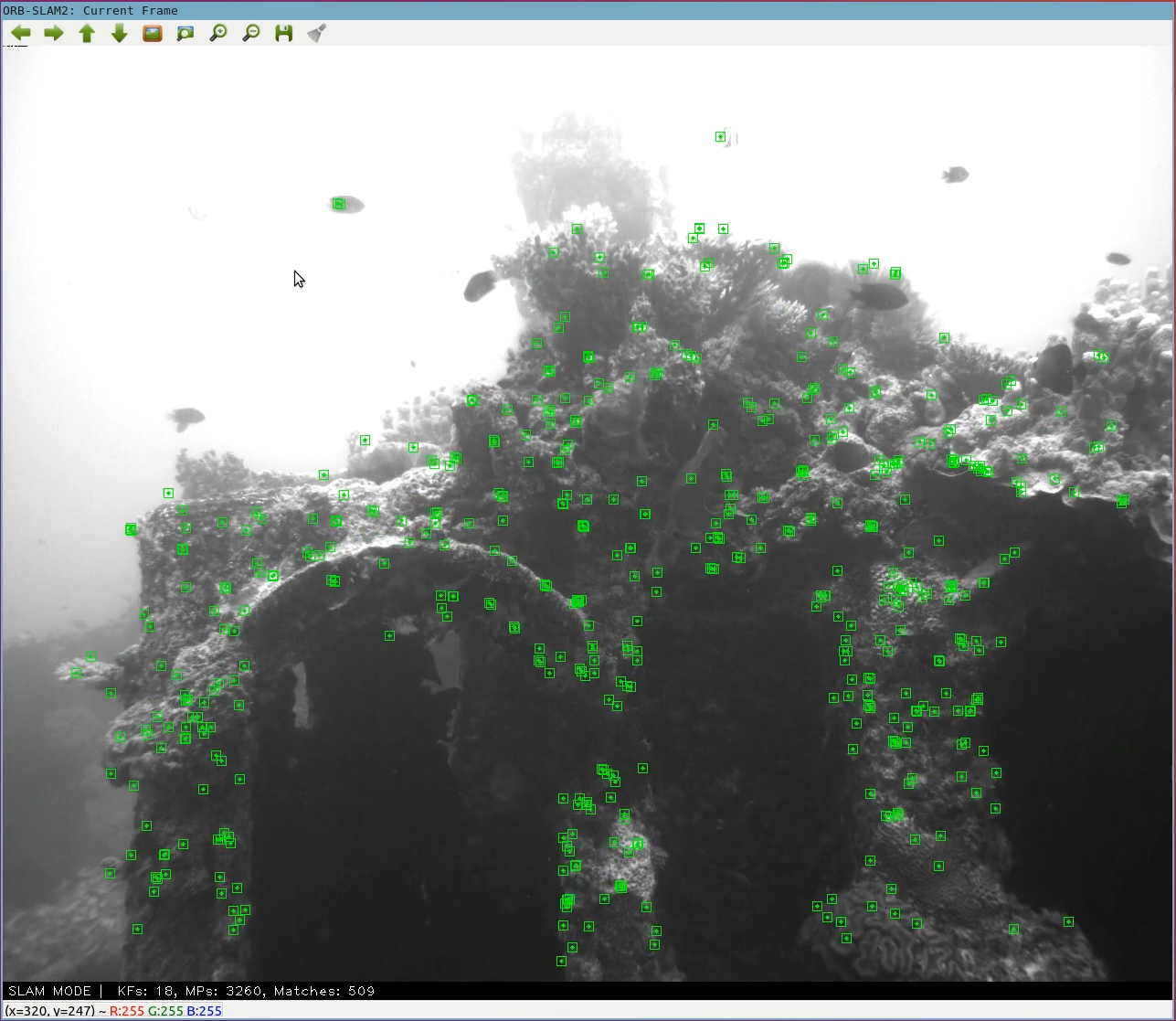

Under sponsorship of the Office of Naval Research (ONR), Lincoln Laboratory researchers prototyped a first-of-a-kind navigation, mapping, and pose determination system based on computer vision. Called the Undersea Mapping Rig, the system runs simultaneous localization and mapping (SLAM) algorithms in real time, without the need for any post-processing, on a small, power-efficient, high-performance NVIDIA Jetson embedded computing device. These SLAM algorithms build 3D maps from a single camera (monocular vision) moving through an unstructured underwater environment.

The Laboratory team demonstrated the Undersea Mapping Rig in the Pacific Ocean, off the coast of the Laboratory's Kwajalein Field Site, by traversing a wreck and computing pose in real time. Future plans call for packaging the system into a helmet-mounted unit that divers can wear easily, or integrating it into a small autonomous underwater robot. This effort is part of a larger ONR-funded basic research program in collaborative robotics.